A quick guide to deploying Uptime-Kuma on your Kubernetes cluster.

Uptime-Kuma is an easy-to-deploy, easy-to-use monitoring tool with several “Monitoring Types” such as HTTP(s), port checking, DNS and more. It also includes some really nice alerting mechanisms such as mail, Teams and Gotify. If you want to find out more about Uptime-Kuma, you can find some information here.

I’ve created a set of manifests that deploy Uptime-Kuma with all needed components on your Kubernetes cluster. You can find the most up-to-date code in my GitHub repository. Just apply it using Kustomize, for example with kubectl apply -k overlays/dev.
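For readers who have not used Kustomize before, here is a minimal sketch of what an overlay such as overlays/dev might contain. The directory layout and the file names mentioned in the comments are assumptions for illustration and are not copied from the repository, which may be organized differently.

```yaml
# overlays/dev/kustomization.yaml
# Hypothetical sketch; the layout of the actual repository may differ.
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization

# Pull in the shared manifests. This assumes a base/ directory whose own
# kustomization.yaml lists the Namespace, Service, StatefulSet and Ingress
# manifests (for example namespace.yaml, service.yaml, statefulset.yaml,
# ingress.yaml) under "resources".
resources:
  - ../../base

# Place every resource in the namespace used throughout this post.
namespace: kuma
```

With a layout like this, kubectl apply -k overlays/dev renders the base manifests with the overlay's settings and applies the result in one step.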

If you want to go step by step, read along below. Once you’ve created the manifests, you can apply them in the order given.

First we create a separate Namespace for Uptime-Kuma named kuma. Feel free to change the name to suit your needs.

    kind: Namespace
    apiVersion: v1
    metadata:
      name: kuma

Then we create a Service for Uptime-Kuma which will listen on port 3001. The selector will be app: uptime-kuma.

    apiVersion: v1
    kind: Service
    metadata:
      name: uptime-kuma-service
      namespace: kuma
    spec:
      selector:
        app: uptime-kuma
      ports:
        - name: uptime-kuma
          port: 3001

Now it’s time for the centerpiece: our StatefulSet, which describes the actual workload and a persistent volume.

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: uptime-kuma
      namespace: kuma
    spec:
      replicas: 1
      serviceName: uptime-kuma-service
      selector:
        matchLabels:
          app: uptime-kuma
      template:
        metadata:
          labels:
            app: uptime-kuma
        spec:
          containers:
            - name: uptime-kuma
              image: louislam/uptime-kuma:1.11.4
              env:
                - name: UPTIME_KUMA_PORT
                  value: "3001"
                - name: PORT
                  value: "3001"
              ports:
                - name: uptime-kuma
                  containerPort: 3001
                  protocol: TCP
              volumeMounts:
                # Uptime-Kuma keeps its database under /app/data
                - name: kuma-data
                  mountPath: /app/data
      volumeClaimTemplates:
        - metadata:
            name: kuma-data
          spec:
            accessModes: ["ReadWriteOnce"]
            volumeMode: Filesystem
            resources:
              requests:
                storage: 1Gi

The Ingress rule that gets deployed is configured for Nginx as the Ingress Controller. You might need to adapt it to your needs. An annotation for Cert-Manager is also included. If you don’t have Cert-Manager set up, you don’t need this annotation. You can find instructions for setting up both Nginx and Cert-Manager here and here (both only in German).
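The cert-manager.io/cluster-issuer annotation in the Ingress manifest refers to a ClusterIssuer named letsencrypt-staging, which has to exist in the cluster already. As a rough sketch, assuming Cert-Manager is installed and the HTTP-01 challenge is solved through the Nginx Ingress Controller, such an issuer could look like this. The e-mail address and the account key secret name are placeholders and not taken from the original setup.

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  # Must match the value of the cert-manager.io/cluster-issuer annotation.
  name: letsencrypt-staging
spec:
  acme:
    # Let's Encrypt staging endpoint: generous rate limits, but the
    # certificates it issues are not trusted by browsers.
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    email: admin@mydomain.de            # placeholder address
    privateKeySecretRef:
      name: letsencrypt-staging-account-key   # placeholder secret name
    solvers:
      - http01:
          ingress:
            class: nginx
```

Because the staging endpoint issues untrusted certificates, browsers will show a warning until the annotation points at an issuer that uses the Let's Encrypt production endpoint.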

You also have to configure the DNS name you want to use for Uptime-Kuma in the host(s) sections.

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: kuma

namespace: kuma

annotations:

kubernetes.io/ingress.class: "nginx"

nginx.ingress.kubernetes.io/backend-protocol: "HTTP"

cert-manager.io/cluster-issuer: "letsencrypt-staging"

spec:

tls:

- hosts:

- uptime-kuma.mydomain.de

secretName: uptime-kuma.mydomain.de

rules:

- host: uptime-kuma.mydomain.de

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: uptime-kuma-service

port:

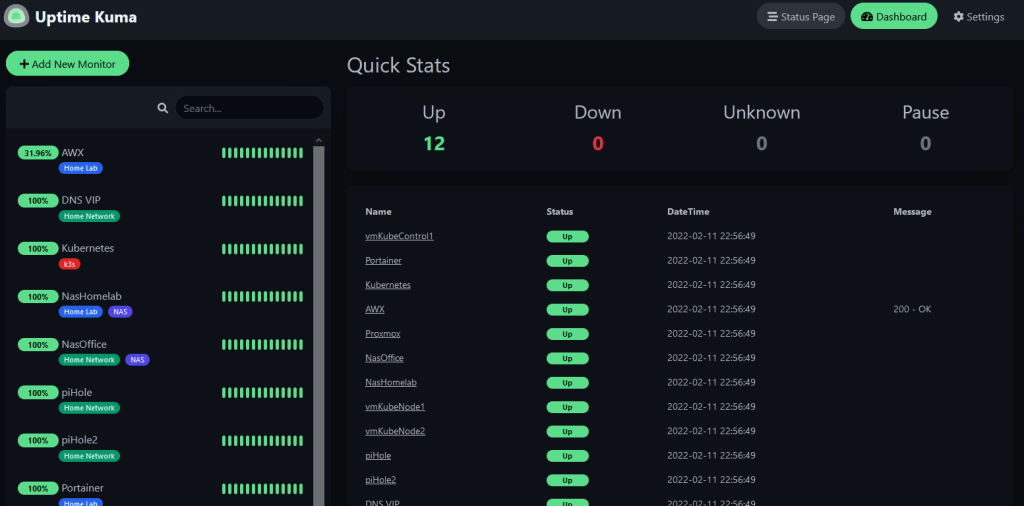

number: 3001After deploying all manifests, you should be able to get connected to Uptime-Kuma (you of course have to configure the DNS name on your Networks DNS Server). On first login, you have to create an admin user. After this is done, you are redirected to the dashboard. Have fun playing around with Uptime-Kuma!

Philip

Update 02.06.2022: I’ve added some more overlays for Uptime Kuma to my GitHub repository. You can now choose whether to deploy Kuma using an Ingress, LoadBalancer or NodePort configuration. I made this for those of you who don’t have an Ingress Controller set up.
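To give an idea of the NodePort variant, here is a minimal sketch of the Service from above exposed as type NodePort. It is an illustration under assumptions and not a copy of the overlay in the repository; in particular, the nodePort value 30001 is a made-up example.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: uptime-kuma-service
  namespace: kuma
spec:
  type: NodePort
  selector:
    app: uptime-kuma
  ports:
    - name: uptime-kuma
      port: 3001
      targetPort: 3001
      # Example value only. It has to lie inside the cluster's NodePort
      # range, which is 30000-32767 by default.
      nodePort: 30001
```

With a Service like this, Uptime-Kuma would be reachable on port 30001 of any cluster node, without an Ingress Controller. For the LoadBalancer variant, the type would be LoadBalancer and the nodePort line could be dropped.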

Hi,

thank you for this. Why do you use a StatefulSet (sts) and not a Deployment?

best regards

Manuel

Hello, thanks for your feedback. The reason I’m using a StatefulSet is that in my homelab setup, the storage volume cannot be attached to all k8s nodes. As far as I know, the pods of a StatefulSet will not change nodes after the initial deployment, whereas the pods of a Deployment would. So if the node running Kuma fails in my setup, Kuma will not be started on another node. If your setup differs from mine, you could also use a Deployment in place of the StatefulSet.
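For setups where the volume can follow the pod to another node, a Deployment-based variant could look roughly like the sketch below. It is untested here and rests on assumptions: a Deployment has no volumeClaimTemplates, so the PersistentVolumeClaim is created separately, and its name kuma-data is chosen for illustration (it is a new claim, not the one created by the StatefulSet).

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: kuma-data        # illustrative name for a standalone claim
  namespace: kuma
spec:
  accessModes: ["ReadWriteOnce"]
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: uptime-kuma
  namespace: kuma
spec:
  replicas: 1
  strategy:
    # Stop the old pod before starting the new one, so that two pods never
    # compete for the same ReadWriteOnce volume during an update.
    type: Recreate
  selector:
    matchLabels:
      app: uptime-kuma
  template:
    metadata:
      labels:
        app: uptime-kuma
    spec:
      containers:
        - name: uptime-kuma
          image: louislam/uptime-kuma:1.11.4
          ports:
            - name: uptime-kuma
              containerPort: 3001
          volumeMounts:
            - name: kuma-data
              mountPath: /app/data
      volumes:
        - name: kuma-data
          persistentVolumeClaim:
            claimName: kuma-data
```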

Kind regards

Philip

Hi Philip,

thank you for your explanation, that makes sense.

Some K8s distros (in my case Azure) are aware of that, so the pod wouldn’t be started on a node where it can’t reach the storage.

kind regards

Manuel

Hello, thank you very much for sharing the manifests. I am having a problem: the application is not starting. Looking at the pod logs, it gets this far:

    ==> Performing startup jobs and maintenance tasks
    ==> Starting application with user 0 group 0
    Welcome to Uptime Kuma
    Your Node.js version: 16
    2022-06-02T14:55:25.958Z [SERVER] INFO: Welcome to Uptime Kuma
    2022-06-02T14:55:25.958Z [SERVER] INFO: Node Env: production
    2022-06-02T14:55:25.958Z [SERVER] INFO: Importing Node libraries
    2022-06-02T14:55:25.960Z [SERVER] INFO: Importing 3rd-party libraries
    2022-06-02T14:55:26.886Z [SERVER] INFO: Creating express and socket.io instance
    2022-06-02T14:55:26.888Z [SERVER] INFO: Server Type: HTTP
    2022-06-02T14:55:26.895Z [SERVER] INFO: Importing this project modules
    2022-06-02T14:55:27.151Z [NOTIFICATION] INFO: Prepare Notification Providers
    2022-06-02T14:55:27.228Z [SERVER] INFO: Version: 1.16.1
    2022-06-02T14:55:27.242Z [DB] INFO: Data Dir: ./data/
    2022-06-02T14:55:27.244Z [SERVER] INFO: Copying Database
    2022-06-02T14:55:27.255Z [SERVER] INFO: Connecting to the Database

Can you help me?

Hello Edwin,

can you share the following information?

– How did you apply the deployment? Did you use the kustomization from my GitHub repo or did you apply the manifests one by one?

– What’s the output of the following commands?

    kubectl get all -n kuma
    kubectl get ingress -n kuma

Kind regards

Philip

Hello, thanks so much for sharing this info. I have deployed this in an EKS environment with EBS as PV. Whenever the pod restarts, I am losing data, even though I have created a PVC and mounted it at /app/data. Any thoughts?

Hello Mike,

can you share your statefulset manifest here?

Kind regards

Philip

Hi Philip,

I found the issue: I was referencing the data path incorrectly. Because I have built my own custom image, the data was at a different location, not /app/data. Thanks for taking the time.
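For anyone hitting the same problem, the fix boils down to mounting the volume at the directory the image actually writes to. A sketch of the relevant fragment of the pod template follows; the image name and the path /opt/kuma/data are hypothetical placeholders, not the values from this setup.

```yaml
# Fragment of the StatefulSet pod template, not a complete manifest.
containers:
  - name: uptime-kuma
    # Hypothetical custom image that stores its data outside /app/data.
    image: registry.example.com/custom-uptime-kuma:latest
    volumeMounts:
      - name: kuma-data
        # Must be the directory the application really writes its data to.
        # If it points anywhere else, the data lands in the container's
        # ephemeral filesystem and is lost when the pod restarts.
        mountPath: /opt/kuma/data
```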